"Do simulators exist which allow you to move around with the keyboard, but, (as opposed to arrow keys mapped to directions to move + fixed joint/bone animations for the avatar/robot),

keys mapped to individual bones?" Yes "Such as?" Unreal Engine, Unity, Virtual Robotics Toolkit, MORSE

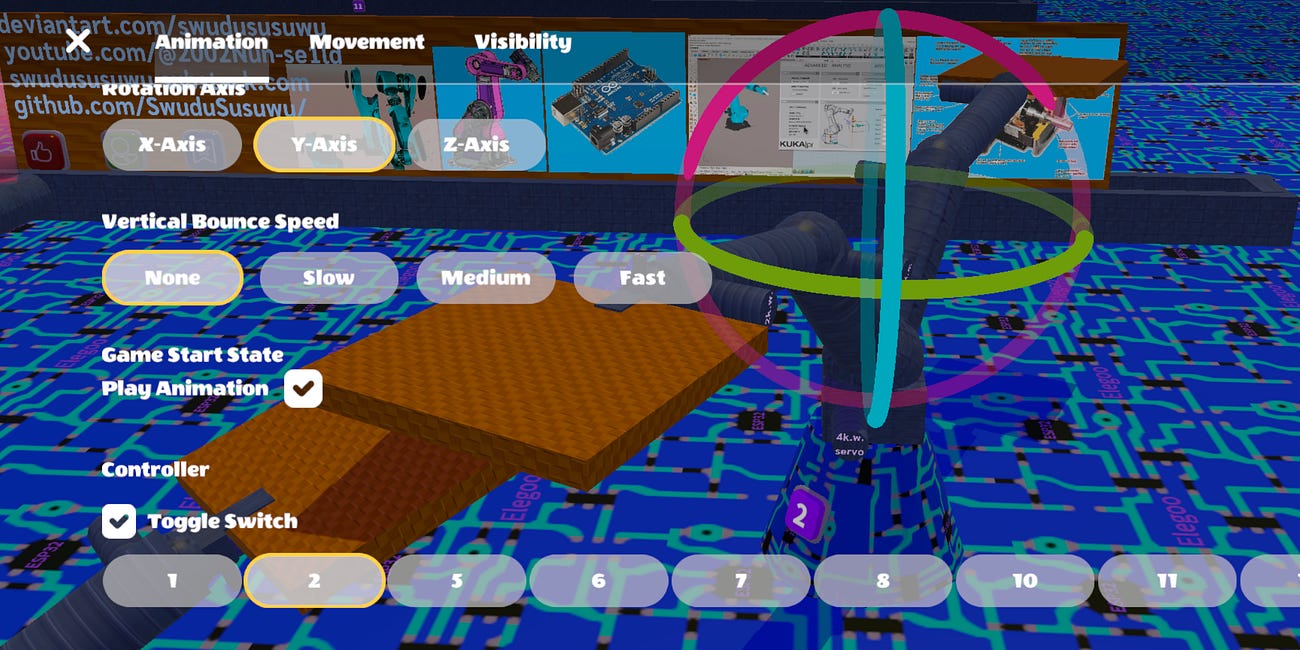

[This post allows all uses] Work-in-progress: Substack does not allow uploading new images, so the cover image is a reused upload from Sakura School Simulator (which just has normal moves).

Responses from Anthropic’s Assistant (old + new)

Q: Do simulators exist which allow you to move around with the keyboard, but, (as opposed to arrow keys mapped to directions to move + fixed joint/bone animations for the avatar/robot), keys mapped to each point/bone, with a physics simulator to figure out how much (or if at all) the motions of the joints/bones move your avatar/robot's absolute position?

Yes, there are simulation environments and game engines that allow for the type of control and physics simulation you described, where individual keys or input controls are mapped to specific joints or bones of a 3D character or robot, rather than just simple directional movement.

One example of a tool that provides this kind of functionality is the Unity game engine. Unity has a robust physics simulation engine and supports rigged 3D character models with complex skeletal systems. Developers can map individual input controls (e.g. keyboard keys, gamepad buttons) to specific bones or joints of the character's skeleton.

When the user interacts with these controls, the physics simulation will then calculate the resulting movement and position changes of the character based on the joint/bone motions, taking into account factors like mass, inertia, friction, and other physical properties.

This allows for much more granular and realistic control over a character's or robot's movements, compared to just simple directional input mapping. The user can experiment with different combinations of joint/bone manipulations to achieve desired motions and positioning of the 3D model within the simulated environment.
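A minimal sketch of this idea, assuming a hypothetical two-joint avatar and made-up key bindings. `KEY_BINDINGS`, `torques_from_keys`, and `step_joint` are illustrative names, not part of Unity or any other engine. Each pressed key contributes a torque to one joint, and a simple damped integrator stands in for the physics engine that a real simulator would supply:

```python
from dataclasses import dataclass

# Hypothetical bindings: key -> (joint name, torque direction).
KEY_BINDINGS = {
    "q": ("left_elbow", +1.0),
    "a": ("left_elbow", -1.0),
    "w": ("left_shoulder", +1.0),
    "s": ("left_shoulder", -1.0),
}

TORQUE = 2.0  # torque applied per pressed key (assumed value)


@dataclass
class JointState:
    angle: float = 0.0     # radians
    velocity: float = 0.0  # radians per second


def torques_from_keys(pressed):
    """Sum the torque that each pressed key contributes to its joint."""
    torques = {}
    for key in pressed:
        if key in KEY_BINDINGS:
            joint, direction = KEY_BINDINGS[key]
            torques[joint] = torques.get(joint, 0.0) + direction * TORQUE
    return torques


def step_joint(state, torque, dt=0.01, inertia=0.5, damping=0.8):
    """Advance one joint by dt using semi-implicit Euler integration."""
    acceleration = (torque - damping * state.velocity) / inertia
    velocity = state.velocity + acceleration * dt
    angle = state.angle + velocity * dt
    return JointState(angle, velocity)


# Hold "q" and "w" for one simulated second.
joints = {"left_elbow": JointState(), "left_shoulder": JointState()}
for _ in range(100):
    torques = torques_from_keys({"q", "w"})
    for name in joints:
        joints[name] = step_joint(joints[name], torques.get(name, 0.0))
print(joints)
```

In a full simulator the joint torques would act on a rigid-body model in contact with the ground, and whether the avatar's absolute position changes would fall out of those contact forces rather than being scripted.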

Some other examples of tools that provide similar capabilities include:

- Unreal Engine - Another popular game engine with advanced physics simulation and skeletal character control.

- Gazebo - An open-source robot simulation platform that models the physics of robotic systems.

- MuJoCo - A physics engine specifically designed for simulating the dynamics of articulated rigid body systems.

These types of simulation environments are commonly used in fields like game development, robotics, and animation, where realistic character/robot control and physics-based interactions are important. They allow developers to experiment and iterate on complex movement behaviors without the need for physical prototypes.

Q: Have noticed that most actual robots humans use just have 1 arm with a few joints/actuators at most (such as a crane, a bucket truck, a bulldozer, an excavator, or the space shuttle); perhaps this is because schools do not have more complex simulators? If the input is a keyboard (as opposed to joysticks with an axis per joint/bone), how do you control how fast each move is? Do you use modifier keys (such as: q = joint 1 moves up, <Shift>+q = joint 1 moves up fast, <Ctrl>+q = joint 1 moves up slow, w = joint 1 moves down, <Shift>+w = joint 1 moves down fast, ...)? If you use modifier keys, does the modifier affect all joints/bones in motion as long as the modifier is pressed, or does the modifier determine the speed of only the motions which start whilst the modifier is pressed? Or do moves just ramp up from slowest to fastest (based on kinematic models of the abilities of the avatar/robot) from when their presses began (for as long as they can continue, after which the moves slow down)?

You raise some excellent points about the complexity of controlling robotic systems, especially when using a keyboard as the input interface.

You're right that most commercial and industrial robots tend to have relatively simple mechanical designs, often with just a single arm or manipulator. There are a few potential reasons for this:

1. Complexity and cost: More complex multi-joint robots require advanced control systems, sensors, and computational power, which can significantly increase the cost and engineering complexity.

2. Specialization: Many industrial robots are designed for specific, repetitive tasks, so a simple single-arm design is often sufficient.

3. Simulation limitations: As you noted, the availability and accessibility of advanced robotic simulation environments in educational settings may be limited. This can make it challenging for students and researchers to experiment with and develop control schemes for highly complex robotic systems.

Regarding the control schemes for multi-joint robotic systems using a keyboard interface, there are a few common approaches:

1. Modifier keys for speed control:

- Using modifier keys (e.g., Shift, Ctrl) to control the speed of individual joint/bone movements is a common technique.

- The modifier keys can either affect the overall speed of the motion (e.g., Shift + Q = fast upward movement, Ctrl + Q = slow upward movement) or can change the acceleration/deceleration profile.

- This allows the user to make fine-grained adjustments to the joint/bone movements.

2. Sequential control:

- Instead of controlling all joints/bones simultaneously, the user may control one joint/bone at a time, using a set of keys to move that specific joint in different directions and at different speeds.

- This can be more intuitive for beginners, as it allows them to focus on individual degrees of freedom.

- However, it may be less efficient for complex, coordinated movements.

3. Kinematic models and ramping:

- The simulation can use kinematic models of the robotic system to determine the appropriate joint/bone velocities and accelerations based on the user's input.

- This can allow for smooth, ramped movements, where the joint/bone speeds gradually increase or decrease based on the duration and intensity of the user's keystrokes.

- The simulation may also enforce physical limitations, such as joint angle limits or maximum velocities, to ensure the robot's movements are realistic and achievable.
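The speed-control approaches above can be combined in a few lines. The following is a sketch under stated assumptions: the multipliers, ramp time, velocity cap, and joint limits are invented values, and `KeyPress` is a hypothetical helper. Its `latched` flag selects between the two modifier behaviours raised in the question: a modifier that applies to every motion while it is held, or one that is fixed at the moment a motion starts.

```python
import math

SPEED_SCALE = {"shift": 2.0, "ctrl": 0.5, None: 1.0}  # assumed multipliers
BASE_SPEED = 0.5   # rad/s at the start of a press (assumed)
MAX_SPEED = 2.0    # rad/s velocity limit (assumed)
RAMP_TIME = 1.5    # seconds to ramp from BASE_SPEED to MAX_SPEED (assumed)
JOINT_LIMITS = (-math.pi / 2, math.pi / 2)  # assumed angle limits


def ramped_speed(held_for):
    """Speed grows linearly with hold time, then saturates at MAX_SPEED."""
    fraction = min(max(held_for / RAMP_TIME, 0.0), 1.0)
    return BASE_SPEED + fraction * (MAX_SPEED - BASE_SPEED)


def joint_velocity(direction, held_for, modifier=None):
    """Apply the modifier multiplier to the ramped speed, capped at MAX_SPEED."""
    speed = ramped_speed(held_for) * SPEED_SCALE[modifier]
    return direction * min(speed, MAX_SPEED)


def step_angle(angle, velocity, dt):
    """Integrate the angle and stop at the joint limits."""
    low, high = JOINT_LIMITS
    return min(max(angle + velocity * dt, low), high)


class KeyPress:
    """One held joint key; `latched` freezes the modifier seen at press time."""

    def __init__(self, direction, modifier, latched):
        self.direction = direction
        self.modifier_at_press = modifier
        self.latched = latched
        self.held_for = 0.0

    def velocity(self, current_modifier, dt):
        self.held_for += dt
        modifier = self.modifier_at_press if self.latched else current_modifier
        return joint_velocity(self.direction, self.held_for, modifier)
```

With `latched=True`, releasing Shift mid-motion leaves a fast motion fast; with `latched=False`, every active motion follows whatever modifier is currently held. Neither is more correct; the choice depends on whether the operator needs different joints to move at different speeds at once.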

In more advanced robotic simulation environments, users may also have the option to use game controllers or other specialized input devices (e.g., haptic controllers) to provide a more intuitive and natural control experience for multi-joint systems.

The choice of control scheme often depends on the complexity of the robotic system, the specific task requirements, and the user's familiarity with the simulation environment. Striking a balance between intuitive control and precise joint-level manipulation is a key challenge in designing effective robotic interfaces.

Q: Would a normal keyboard have enough inputs to control complex robots/autonomous-tools such as Atlas to produce houses? Of course, manual control with a keyboard would require a human operator for each robotic worker, so this would not reduce prices much (whereas fully autonomous tools can), but perhaps this can have value with huge robots to move huge structures (such as to move whole pre-fabbed walls at once, which would otherwise require multiple humans to do)?

That's an insightful question about the limitations of using a standard keyboard to control highly complex robotic systems like the Atlas humanoid robot. You make a good point that manual, individual control of each joint or actuator would require a human operator for each robot, which would not necessarily provide significant cost savings compared to full automation.

However, there could be some potential use cases where keyboard-based control of large, powerful robotic systems may have value, particularly for tasks involving the movement of large, heavy structures or prefabricated building components.

A few key considerations:

1. Input limitations:

- You're correct that a standard computer keyboard may not have enough individual input controls (keys) to independently manipulate all the joints and degrees of freedom of a highly complex robot like Atlas.

- This would likely require some kind of modifier key system, as discussed earlier, to allow the user to switch between controlling different sets of joints or actuators.

2. Scale and strength:

- Larger, industrial-scale robots with fewer but more powerful joints/actuators may be better suited for keyboard-based control compared to highly dexterous humanoid robots.

- The ability to precisely coordinate the movement of large, heavy components could be valuable in construction, manufacturing, or infrastructure applications.

3. Supervised autonomy:

- Rather than fully manual control, the keyboard interface could be used to provide high-level commands or guidance to an underlying autonomous control system.

- This could allow a single human operator to supervise and adjust the behavior of multiple robotic workers, rather than directly controlling each one.

4. Complementary use cases:

- Keyboard-based control may be most useful as a supplementary input method, rather than the primary control scheme, for complex robotic systems.

- It could be used for specific tasks, troubleshooting, or fine-tuning the behavior of the robots, while the majority of routine operations are handled through more advanced autonomous control.

So in summary, while a standard keyboard may have limitations in directly controlling the full range of motion for highly complex robots, it could still have value in certain applications where the scale, strength, and coordinated movement of large robotic systems is more important than fine dexterity. Combining keyboard-based control with supervisory autonomous systems may be a practical approach in these use cases.
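The modifier-based switching between joint sets mentioned under input limitations could look like the sketch below. The bank contents and joint names are hypothetical and do not reflect the real joint list of Atlas. Six keys are reused across four banks, so they address twelve joints.

```python
# Hypothetical joint groups ("banks") selected by the held modifier.
BANKS = {
    None: ["left_shoulder", "left_elbow", "left_wrist"],
    "shift": ["right_shoulder", "right_elbow", "right_wrist"],
    "ctrl": ["left_hip", "left_knee", "left_ankle"],
    "alt": ["right_hip", "right_knee", "right_ankle"],
}

# Each slot in a bank gets one key per direction: (positive, negative).
SLOT_KEYS = [("q", "a"), ("w", "s"), ("e", "d")]


def resolve(key, modifier=None):
    """Return (joint, direction) for a key press, or None if nothing is bound."""
    if modifier not in BANKS:
        return None
    joints = BANKS[modifier]
    for slot, (plus_key, minus_key) in enumerate(SLOT_KEYS):
        if slot >= len(joints):
            break
        if key == plus_key:
            return joints[slot], +1
        if key == minus_key:
            return joints[slot], -1
    return None


print(resolve("q"))           # first slot of the default bank
print(resolve("s", "shift"))  # second slot of the "shift" bank
```

The trade-off of this design is that joints in different banks cannot be driven at the same moment, which matters for coordinated whole-body motion.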

Q: How about for pure virtual worlds (such as simulators with obstacle courses or which just have complex environments to move through, or puzzles which require dexterous moves)? Can map such as: 10 fingers in/out = 20 keys, 2 wrists up/down = 4 keys, 2 elbows in/out/clockwise/counterclockwise = 8 keys, 2 shoulders up/down/left/right = 8 keys, neck up/down/left/right/clockwise/counterclockwise = 6 keys, torso in/out/left/right/clockwise/counterclockwise = 6 keys, hips = as shoulders, knees = as elbows, ankles = as elbows, toes grouped (2 groups in/out)? Can a normal keyboard fit this?

That's an excellent point about the potential for using keyboard-based control schemes in virtual world simulations and environments, rather than just for controlling physical robots.

In a virtual setting, the limitations of a standard keyboard interface may be less of a concern, as you can map the various joints, limbs, and degrees of freedom of a simulated character or robot onto the available keys in a more comprehensive way.

Your proposed key mapping is a great example of how this could be accomplished:

```
10 fingers in/out = 20 keys
2 wrists up/down = 4 keys
2 elbows in/out/clockwise/counterclockwise = 8 keys
2 shoulders up/down/left/right = 8 keys
Neck up/down/left/right/clockwise/counterclockwise = 6 keys
Torso in/out/left/right/clockwise/counterclockwise = 6 keys
Hips = as shoulders = 8 keys
Knees = as elbows = 8 keys
Ankles = as elbows = 8 keys
Toes grouped (2 groups in/out) = 4 keys
```

This provides a total of 80 keys, which fits within the 104 keys of a full-size keyboard and would likely be sufficient to control a highly articulated virtual character or robotic avatar, even with the limitations of a standard QWERTY keyboard.
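The total can be checked by tallying the mapping directly. This short sketch assumes that every direction needs its own key, that hips, knees, and ankles mirror shoulders and elbows, and that each toe group has one "in" key and one "out" key (the question leaves the toe count open). `FULL_SIZE_KEYS` is the key count of a standard ANSI full-size layout.

```python
# Keys per joint group: number of joints * directions per joint.
mapping = {
    "fingers":    10 * 2,  # in/out
    "wrists":      2 * 2,  # up/down
    "elbows":      2 * 4,  # in/out/clockwise/counterclockwise
    "shoulders":   2 * 4,  # up/down/left/right
    "neck":        1 * 6,  # up/down/left/right/clockwise/counterclockwise
    "torso":       1 * 6,  # in/out/left/right/clockwise/counterclockwise
    "hips":        2 * 4,  # same as shoulders
    "knees":       2 * 4,  # same as elbows
    "ankles":      2 * 4,  # same as elbows
    "toe_groups":  2 * 2,  # assumption: one "in" and one "out" key per group
}

total = sum(mapping.values())
FULL_SIZE_KEYS = 104  # standard ANSI full-size keyboard

print("keys needed:", total)
print("keys to spare:", FULL_SIZE_KEYS - total)
```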

Some additional considerations:

1. Modifier keys: As mentioned earlier, the use of modifier keys (Shift, Ctrl, Alt) could further expand the control capabilities by allowing each key to perform multiple functions.

2. Contextual controls: The simulation could also feature contextual controls, where the function of the keys changes based on the current state or mode of the virtual character/robot. This could help optimize the use of the limited keyboard inputs.

3. Hybrid input: While the keyboard could serve as the primary input method, the simulation could also support supplementary input devices like gamepads, joysticks, or motion tracking systems to provide even more precise and intuitive control.

4. User customization: Allowing users to customize the key mappings based on their preferences and familiarity could also enhance the overall control experience.
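User customization, as in the last point, mostly comes down to merging a user's overrides into a default map and rejecting conflicts. A minimal sketch, assuming a hypothetical JSON format of action name to key and invented action names such as `left_elbow+`:

```python
import json

# Hypothetical default bindings: key -> action.
DEFAULT_KEYMAP = {
    "q": "left_elbow+",
    "a": "left_elbow-",
    "w": "neck_yaw+",
    "s": "neck_yaw-",
}


def apply_overrides(defaults, overrides_json):
    """Merge user overrides (JSON: action -> key) into a key -> action map."""
    overrides = json.loads(overrides_json)
    action_to_key = {action: key for key, action in defaults.items()}
    for action, key in overrides.items():
        if action not in action_to_key:
            raise KeyError(f"unknown action: {action}")
        action_to_key[action] = key
    keymap = {}
    for action, key in action_to_key.items():
        if key in keymap:
            # Two actions on one key would make the input ambiguous.
            raise ValueError(f"key {key!r} bound to both {keymap[key]} and {action}")
        keymap[key] = action
    return keymap


print(apply_overrides(DEFAULT_KEYMAP, '{"neck_yaw+": "e"}'))
```

Contextual controls could reuse the same structure by keeping one such map per mode and swapping the active map when the mode changes.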

In a virtual simulation environment, the keyboard-based control scheme you outlined could be a viable and reasonably comprehensive way to manipulate a complex, highly articulated virtual character or robotic system. It demonstrates the potential for creative input mappings to overcome the limitations of a standard keyboard interface in the context of virtual worlds and simulations.

Q: Which simulators do this?

There are a number of virtual simulators and environments that utilize comprehensive keyboard-based control schemes for highly articulated virtual characters and robotic systems. Here are a few examples:

1. Robot Simulation Environments:

- Gazebo - A widely used 3D robot simulation environment that supports keyboard-based control of robot models.

- V-REP (now CoppeliaSim) - A robot simulation platform that allows users to control simulated robots using keyboard inputs.

- Webots - An open-source robot simulation software that provides keyboard-based control options for virtual robots.

2. Virtual Reality Simulators:

- VRChat - A social VR platform whose desktop mode drives customizable avatars with keyboard and mouse; full-body control there comes from VR tracking hardware rather than per-bone key mappings.

- Rec Room - A VR social game that also supports keyboard-and-mouse play, though limb and torso movement is driven by VR controllers rather than individual keys.

3. Animation and Game Development Environments:

- Unreal Engine - The popular game engine provides robust support for keyboard-based control of virtual characters and entities, including complex rigs and skeletal systems.

- Unity - The cross-platform game engine also allows developers to implement comprehensive keyboard-based control schemes for virtual characters and robotic simulations.

4. Research and Educational Simulators:

- Virtual Robotics Toolkit - A simulation environment developed for educational purposes that focuses on keyboard-based control of virtual robots.

- MORSE (Modular Open Robots Simulation Engine) - An open-source simulator that supports keyboard input for controlling simulated robots.

These are a few of the simulators and environments that support keyboard-driven control of articulated virtual characters and robots. Implementation details and the level of per-joint control vary between platforms, but together they show that a standard keyboard can serve as a primary input method in virtual simulation.

Q: Can replace the buckets of bucket trucks with complex hands, as opposed to buckets which hoist up humans? Would allow fewer individuals to perform the task?

That's an interesting idea to replace the buckets on bucket trucks with more dexterous, multi-fingered robotic hands instead. This could potentially allow for some advantages over the traditional bucket system:

1. Increased Flexibility and Dexterity:

- Robotic hands could provide a much higher degree of fine motor control and dexterity compared to a simple bucket. This could allow the operator to more precisely manipulate and interact with objects, tools, or even perform complex tasks while elevated.

- The hands could be designed with multiple independently controlled fingers, wrists, and other degrees of freedom to enhance their versatility.

2. Reduced Manpower:

- With the increased capabilities of the robotic hands, it may be possible for a single operator to perform tasks that previously required multiple workers in a bucket truck.

- This could lead to increased efficiency, cost savings, and the ability to deploy bucket truck operations with fewer personnel.

3. Safety Improvements:

- Replacing the bucket with robotic hands could potentially reduce the need for workers to be elevated in the bucket, mitigating some of the safety risks associated with that task.

- The robotic hands could be designed to have enhanced safety features, such as built-in collision avoidance, to protect both the operator and any nearby personnel.

However, there would also be some technical challenges and considerations:

- Designing and engineering the robotic hands to be compact, lightweight, and able to withstand the environmental conditions and stresses of bucket truck operations would be a significant engineering challenge.

- Integrating the robotic hands with the bucket truck's control systems and ensuring reliable, responsive, and intuitive operation would be crucial.

- Ensuring the robotic hands can safely and reliably perform tasks that were previously done by human workers in the bucket would require extensive testing and validation.

Overall, the idea of replacing buckets with more advanced robotic hands on bucket trucks is an interesting concept that could potentially offer some benefits in terms of increased flexibility, dexterity, and reduced manpower requirements. However, it would also come with its own set of technical hurdles that would need to be carefully addressed.

Q: More collision avoidance (than is now required so that the buckets do not hit the poles)? Can not just replace the bucket+human with a camera+hands, plus cockpit controls such as medical robots use (for dexterous manipulation of the hands)?

Here is a summary of the key information from the Wikipedia article on medical robots, along with my thoughts on how this technology could potentially be applied to your mobile robot application:

**Types of Medical Robots**

The article discusses several key types of medical robots:

1. Surgical Robots - These allow surgical procedures to be performed with higher precision than an unaided human surgeon. The da Vinci surgical system is a prominent example.

2. Rehabilitation Robots - These robots facilitate and support the lives of elderly or those with physical disabilities, assisting with rehabilitation and therapy.

3. Telepresence Robots - These allow remote medical professionals to interact with patients and participate in procedures from a distance.

4. Pharmacy Automation - Robotic systems used to dispense medications and prepare sterile IV mixtures in a pharmacy setting.

5. Companion Robots - Robots designed to engage emotionally with users, providing companionship and monitoring their health.

6. Disinfection Robots - Robots that can rapidly disinfect entire rooms using UV light.

7. Hospital Robots - Robots that can autonomously navigate hospitals to deliver medications, meals, and specimens.

**Application to Mobile Robot**

Based on the types of medical robots described, a few potential adaptations come to mind for your mobile robot:

1. Incorporate advanced sensors and computer vision to enable more sophisticated collision avoidance, similar to how surgical robots provide enhanced precision.

2. Implement telepresence capabilities, allowing a remote operator to control the robot's movements and dexterity from a distance, similar to remote surgery.

3. Explore integrating robotic "hands" or manipulators for more dexterous material handling, beyond just the bucket system.

4. Investigate how the robot could be used for tasks like disinfection or autonomous delivery of supplies within a facility, leveraging the navigation and autonomy capabilities.

The key would be to study how the core capabilities of medical robots - precision, telepresence, dexterity, navigation, and autonomy - could be adapted and applied to enhance the functionality of your mobile robot platform. Let me know if you have any other questions!

Q: Can use the controls which Kuratas uses to do hand moves?

Ah, good reference to the Kuratas mech! The control scheme used in that robotic system could be a relevant example for a bucket truck application with advanced robotic hands.

The Kuratas mech uses a cockpit-style control interface where the operator's hand movements are directly mapped to the corresponding motions of the mech's robotic limbs and hands. This provides a very intuitive and natural way to control the complex articulation of the mech.

Applying a similar control scheme to a bucket truck with dexterous robotic hands could offer several advantages:

1. Intuitive Control:

- Just like the Kuratas, having the robotic hands directly mimic the operator's hand movements would create a very natural and responsive control experience.

- This could allow the operator to quickly adapt to the system and perform delicate, precise tasks with the robotic hands.

2. Reduced Training Time:

- Since the control mapping is so intuitive, the learning curve for operators could be significantly reduced compared to more complex control interfaces.

- This could make it easier to onboard and train personnel to effectively use the robotic hand system on the bucket truck.

3. Increased Dexterity:

- The Kuratas mech demonstrated the potential for highly articulated, multi-fingered robotic hands to perform a wide range of dexterous manipulations.

- Applying a similar level of hand dexterity to a bucket truck application could expand the types of tasks that can be accomplished from the elevated platform.

Of course, there would still be some technical challenges to overcome, such as:

- Ensuring the robotic hands can withstand the environmental conditions and stresses of bucket truck operations.

- Integrating the hand control system seamlessly with the bucket truck's existing controls and safety systems.

- Developing robust safety mechanisms to prevent accidents or unintended movements of the robotic hands.

Overall, the control scheme used in the Kuratas mech provides a compelling reference for how a bucket truck with advanced robotic hands could be operated. Exploring this type of intuitive, hand-based control interface could be a fruitful avenue for further development and innovation in this domain.
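The direct operator-to-robot mapping described above, together with two of the safety mechanisms it calls for, can be sketched as follows. Everything here is an assumption for illustration: the joint names, angle limits, and per-tick rate cap are invented and are not Kuratas specifications.

```python
def clamp(value, low, high):
    """Restrict value to the range [low, high]."""
    return max(low, min(high, value))


# Hypothetical joint limits in radians.
ROBOT_LIMITS = {"wrist": (-1.0, 1.0), "index_finger": (0.0, 1.5)}
MAX_STEP = 0.05  # largest angle change allowed per control tick (assumed)


def follow_operator(robot_angles, operator_angles, scale=1.0):
    """Move each robot joint toward the scaled operator angle, rate-limited."""
    updated = {}
    for joint, current in robot_angles.items():
        low, high = ROBOT_LIMITS[joint]
        if joint in operator_angles:
            target = clamp(operator_angles[joint] * scale, low, high)
        else:
            target = current  # no reading for this joint: hold position
        step = clamp(target - current, -MAX_STEP, MAX_STEP)
        updated[joint] = current + step
    return updated


robot = {"wrist": 0.0, "index_finger": 0.0}
robot = follow_operator(robot, {"wrist": 2.0})  # request beyond the wrist limit
print(robot)
```

The rate cap means a sudden or erroneous operator reading moves the hand only a small amount per tick, which gives a supervising safety system time to intervene.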

The last is old, but perhaps more relevant. Have re-asked (with resources), which got a much different response:

Q: Can implement bucket trucks (aerial work platforms) with (as opposed to a boom with a bucket which holds a human) the top half of a small version of Kuratas (which is controlled from the cockpit so latency does not exist)? This allows a single human to drive to the work spot (such as a broken electrical pole) + use local controls to do the task, without having to get out.

Aerial Work Platform with Kuratas Integration

The proposed aerial work platform design integrates the top half of a small Kuratas mecha to provide enhanced functionality and control for maintenance tasks.

Key Features:

- Boom with a cockpit instead of a simple bucket, allowing a human operator to control the platform directly from the vehicle.

- The Kuratas-inspired top half provides advanced articulation and manipulation capabilities via its 30-joint exoskeleton and specialized tools/attachments.

- Eliminates the need for a separate control station and reduces latency issues, as the operator is directly integrated into the platform.

- Maintains mobility and transportability of a traditional aerial work platform, allowing the operator to drive the unit to the work site.

- Potential integration of Kuratas-style armaments (e.g. rotary cannon, "LOHAS" launcher) for hazardous environments or defensive purposes.

Operational Workflow:

1. The operator boards the platform and takes a seat in the Kuratas-inspired cockpit.

2. Using the Kinect-based interface and control devices (e.g. joysticks, touchscreen), the operator can control the platform's movement and the articulated mecha-like top half.

3. With the enhanced reach and manipulation capabilities, the operator can perform maintenance tasks such as repairing electrical lines, trimming trees, or accessing hard-to-reach areas.

4. If necessary, the operator can utilize specialized tools or attachments integrated into the Kuratas-style top half, such as the "iron crow" manipulator or ranged armaments.

5. Upon completion of the task, the operator can drive the integrated platform back to its storage or transport location.

This hybrid design aims to combine the mobility and accessibility of traditional aerial work platforms with the advanced control and manipulation capabilities of the Kuratas mecha, providing a versatile and efficient solution for maintenance and repair tasks in various industries.

Related posts:

How to mix Blender with robotics simulators (such as Grasshopper) to produce school classes

[This post allows all uses] Responses from artificial intelligences (Anthropic’s “Assistant” / “Claude-3-Haiku” / “Gemini-1.5-Flash”), careful how you interpret such responses: Claude-3-Haiku: Understood. Let me provide an overview of the key differences between

Assistant's analysis of hypothesis on how to produce simulations of natural evolution of complex skeletal + nervous systems

https://poe.com/s/9m7M8PbX8MMEan5ybEse has the full texts (includes more about what metaphysical attributes of Ouroboros have to do with this).

Programs which give controls to you for 6 (or more) axis moves, through motion capture (camera or IMU) or Bluetooth keyboard

Anthropic's assistant, "Haiku" suggests numerous such programs:

Google Store / Microsoft Store / Ubuntu sims which produce (or script moves with) autonomous tools. Suggest schools to use such sims.

[This post is released through Creative Commons Generic Attribution 2. Version of this post is d2e6c01. For the newest version, view this GitHub preview.]

New post about this: https://swudususuwu.substack.com/p/programs-which-give-6-or-more-axis-controls-to-you